AI-Generated Motion: My Early Experience with Sora in the Car Design Workflow

There are few things more exciting, or more frustrating, than the first time a powerful new tool enters a designer’s workflow. That’s exactly how it feels to work with Sora, the generative video AI developed by OpenAI. On its best days, Sora delivers breathtaking visuals: cinematic quality, fluid camera paths, and environmental realism that used to require entire render farms. On its worst days? It produces surreal morphs, flipped axles, or reverse-driving RVs with jelly-like tires.

As both a designer and visual storyteller, I’ve spent the last few months integrating Sora into my creative process — testing its limits, cheering its wins, and shaking my head when things went sideways (literally). This article reflects on that personal journey: what Sora is already doing for vehicle design and visualization, where it needs urgent improvement, and what the ideal version of a video AI assistant could look like in the modern design studio.

When It Works: Cinematic Brilliance at AI Speed

Let’s start with the good — because when Sora gets it right, it’s like watching your sketchbook come to life.

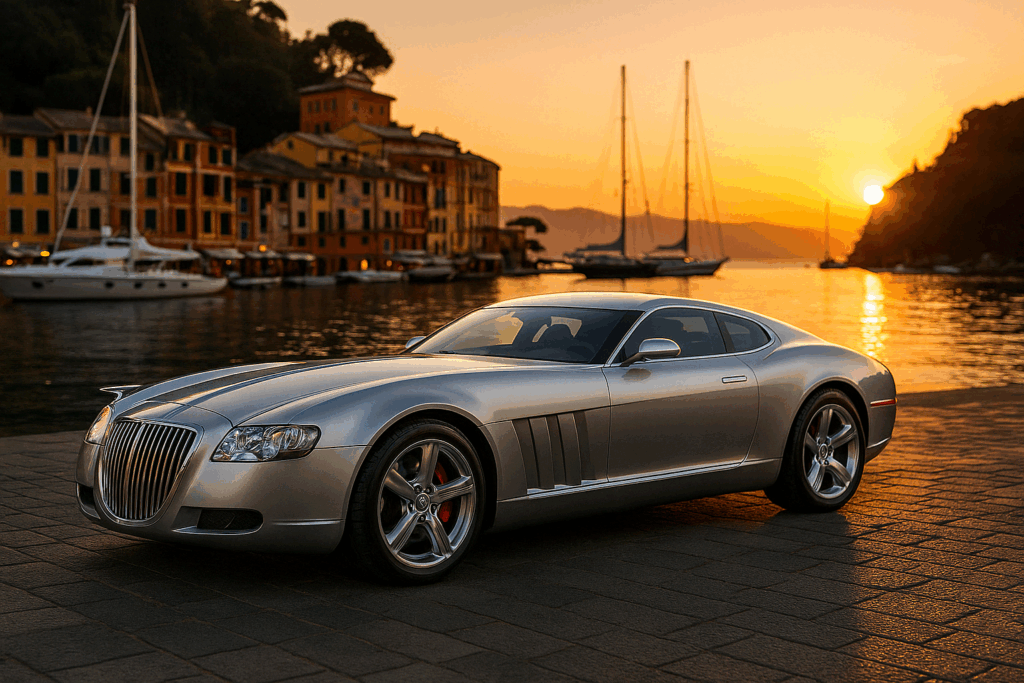

In several experiments, I’ve fed Sora a single still image of a concept vehicle (often rendered in VRED or composited against a background plate) and asked it to perform a simple dolly shot. In a few cases, Sora not only respected the lighting, design, and setting but also produced a video with:

- Stunning depth of field

- Accurate parallax

- Soft environmental motion (water ripple, cloud drift)

- Realistic lens and exposure behavior

The impact on early-phase storytelling is enormous. Instead of static presentation boards or hours spent keyframing camera paths in 3D software, I can now show a vehicle “in motion” — not driving, but breathing. It brings drama and emotion to concept reviews, investor decks, or even team brainstorming.

When It Doesn’t: Morphs, Glitches, and Wheel Weirdness

But Sora is far from production-ready — especially when it comes to technical fidelity.

Despite feeding it identical prompts, I often get completely different results — even with image references. One day I get a smooth tracking shot. The next? The car mutates mid-shot. Wheels stretch, rotate the wrong way, or disappear entirely. Camera movement becomes erratic, or the background flips into a fisheye nightmare. And perhaps most puzzling: front ends sometimes appear where the rear should be, or new bodywork is hallucinated out of nowhere.

These are not just “style” errors — they’re usability blockers for any professional designer hoping to depend on consistency and realism.

Even worse: the more detailed the prompt, the more likely the model is to misinterpret my intent. In some cases, shorter prompts produce more usable results, but even that isn’t a reliable rule. Prompting Sora right now is equal parts writing, guessing, and praying.

It’s Not Just About Prompting

Some may say, “Just learn to prompt better.” But I’ve been down that road. I’ve tried:

- Technical breakdowns (“camera starts low front 3/4, rises slowly to rear 3/4…”)

- Story-style scripts (“a silver RV glides through Bryce Canyon as the sun sets…”)

- Minimal instructions (“slow camera pan revealing side of parked car”)

Sometimes they work. Often they don’t. The same prompt can produce wildly different interpretations, and that’s where we hit a wall. Without the ability to lock the design, motion, or framing, Sora remains a talented but unpredictable assistant, not a dependable tool.

The Ideal Role of Sora in the Studio Workflow

Despite its quirks, I believe Sora, and models like it, will play a huge role in the future of transportation design, but only if it evolves into a system that respects intent and allows for constraint.

Here’s how it could evolve:

1. Design Lock Mode

When I input a reference image, I expect that exact design to appear — not an interpretation. We need the ability to “lock” form, color, lighting, and wheelbase.

2. Camera Path Control

Instead of writing shot descriptions, give us simple visual tools or preset arcs: pan left, tilt up, rotate 15° clockwise, dolly backward 10 meters. This is how real-world camera work happens — and it should be mirrored in AI.

3. Motion Physics That Respect Reality

No more floating cars, flickering tires, or rubbery reflections. Let me say, “Wheels spin naturally with speed. Body stays grounded,” and have the model honor it every time. That kind of physical realism shouldn’t be optional.

4. Sequencing + Layering

Imagine layering multiple AI video elements: a base environment, a moving vehicle layer, a sky animation. Think After Effects meets Midjourney. This would give designers serious flexibility.

5. Iteration Tools

Let us “lock and rerun” — preserving good outputs and tweaking only one variable at a time (e.g., camera path, lighting, background activity).

Conclusion: Sora as a Gateway, Not a Destination (Yet)

Right now, Sora is like the first digital sketchpad: magical, yes, but no replacement for your clay model or Alias setup. It’s brilliant for moodboards, visual storytelling, and early presentation materials. But it’s not yet ready to visualize production concepts with the fidelity, consistency, and control that professional workflows demand.

Still, I believe it will get there. And when it does, it could become a key part of a designer’s toolkit, bridging concept, visualization, and even virtual review. It’s not just about pretty video. It’s about making design feel real sooner and with more emotion.

Until then, we’ll keep experimenting — and pushing it to get better. Because if there’s one thing designers know, it’s that greatness comes from iteration.